MCP, Part I: To MCP or Not (MCP)

Why the Model Context Protocol, a new building block for AI developers, is the AI community’s latest hot topic

TL;DR: The Model Context Protocol (MCP) is a promising development in the AI world because it tackles a very pragmatic problem: how to connect powerful AI models with the wealth of internal knowledge and third-party tools they need to be truly useful. In this post, we’ll explore what MCP is, where it came from, why it’s useful for developers, and why it has huge implications for enterprise AI adoption.

Introduction

Large language models (LLMs) have become incredibly powerful, but they often operate in isolation. One of the biggest challenges in developing AI applications is giving these models the context they need from external data sources (documents, databases, APIs, etc.) in a reliable and scalable way. Traditionally, each new integration between an AI assistant and a data source required a custom connector, creating an ever-growing web of bespoke integrations that’s difficult to maintain.

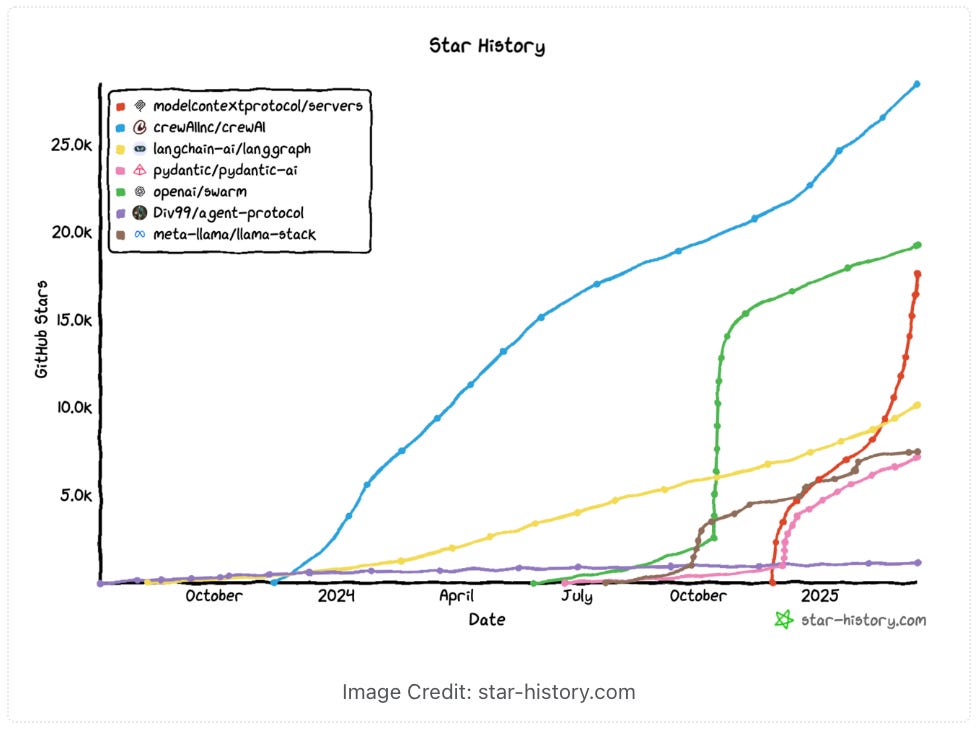

To address this, developers at Anthropic introduced the Model Context Protocol (MCP) in November 2024. MCP is a universal, open standard designed to bridge AI models with the places where your data and tools live, making it much easier to provide context to AI systems. Despite receiving little fanfare upon its initial release, MCP has recently exploded in popularity – already passing LangChain in GitHub stars and on pace to soon overtake OpenAI and CrewAI. Major AI labs and open-source communities alike have rallied behind MCP in a big way. Why? Let’s dive in.

1. What is MCP?

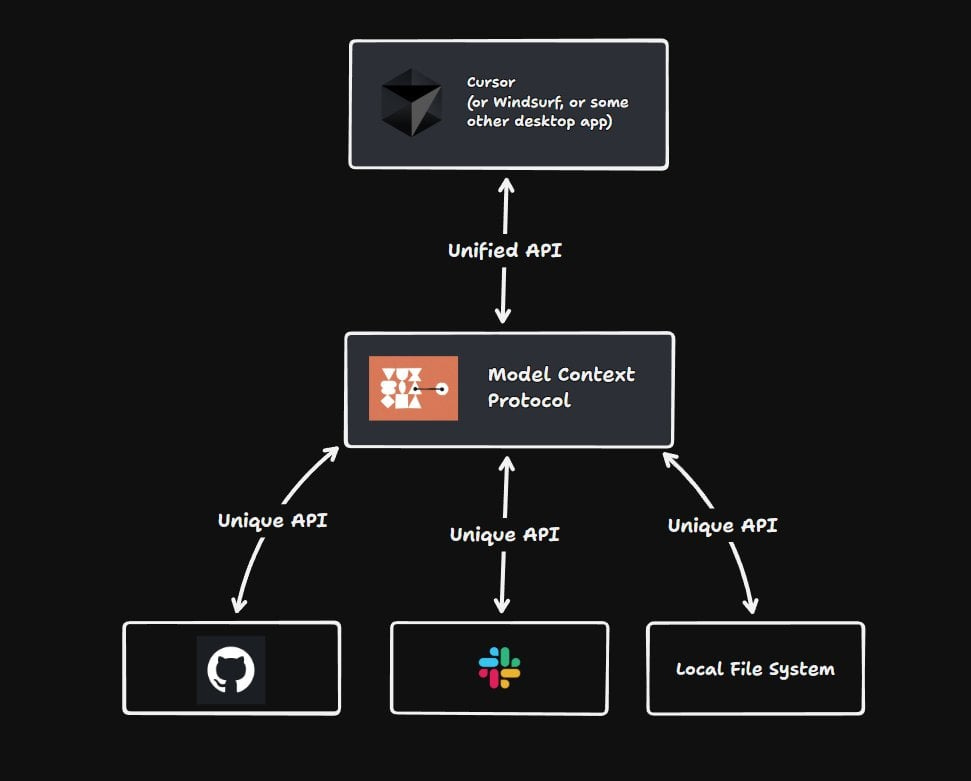

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to AI models (particularly LLMs). In other words, it’s a framework that defines a common language for connecting AI assistants to external data sources and services. Anthropic aptly describes MCP as “like a USB-C port for AI applications” – a universal connector that lets AI models plug into various tools and databases in a consistent way. Just as USB-C standardized how we connect devices, MCP standardizes how AI systems interface with different data sources and functionalities.

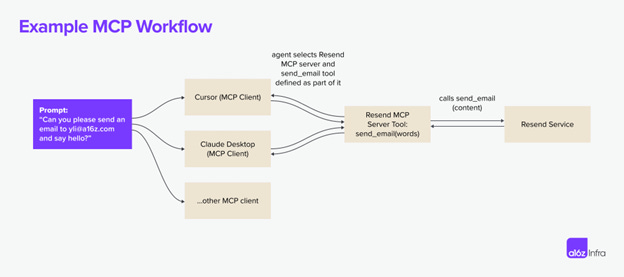

The protocol defines how the AI model can call external tools, fetch data, and interact with services. As a concrete example, below is how Resend, an AI-native email app for developers, uses its MCP server to orchestrate across multiple MCP clients.

Source: a16z enterprise

The purpose of MCP is to break down the silos between AI models and the vast information they may need. It enables developers to set up secure, two-way connections between AI-powered applications and the systems where data lives. For example, with MCP, an AI assistant could retrieve a document from your knowledge base, query a database, or call an external API – all through a single protocol.
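To make “all through a single protocol” concrete: MCP messages are JSON-RPC 2.0 requests and responses, and a client typically discovers a server’s tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds those request objects in plain Python (no MCP SDK, no transport). The tool name `search_docs` and its `query` argument are hypothetical, and a real client would first complete the `initialize` handshake and send the messages over a transport such as stdio or HTTP.

```python
import json
from typing import Any, Dict, Optional


def make_request(request_id: int, method: str,
                 params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask an MCP server which tools it exposes.
list_tools = make_request(1, "tools/list")

# Invoke one tool by name with JSON arguments.
# "search_docs" and "query" are hypothetical, for illustration only.
call_tool = make_request(
    2,
    "tools/call",
    {"name": "search_docs", "arguments": {"query": "Q3 onboarding checklist"}},
)

for message in (list_tools, call_tool):
    print(json.dumps(message))
```

The same two request shapes apply whether the server fronts a knowledge base, a database, or a third-party API; only the tool names and arguments change.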

2. Origins of MCP

Early Days: From Anthropic, with Love

MCP was conceived and built by two Anthropic software engineers, David Soria Parra and Justin Spahr-Summers. If you haven’t heard it yet, David shared the origin story on an episode of the Latent Space podcast, and it’s well worth a listen.

Look closer and the “AI integration” problem turns out to be an M×N one: you have M applications (like IDEs) and N integrations.

Source: Latent Space

MCP was developed in response to a growing problem in the AI field: the lack of a common standard for connectors. Every new pairing required custom work, which made AI systems difficult to scale and maintain in real-world applications. Anthropic engineers call this the “M×N problem”: the compounding headache of connecting M different AI models with N different tools or data sources. Seeing this pain point, Anthropic designed MCP to become a universal standard.

Source: Anthropic
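The arithmetic behind the M×N framing is easy to check. In the idealized case, without a shared standard every application needs its own connector to every tool (M × N pieces of integration work), while with MCP each application implements a client once and each tool is wrapped in a server once (M + N). The counts below are made up for illustration.

```python
def integrations_without_standard(num_apps: int, num_tools: int) -> int:
    # Every app needs a bespoke connector to every tool: M x N.
    return num_apps * num_tools


def integrations_with_mcp(num_apps: int, num_tools: int) -> int:
    # Each app builds one MCP client and each tool gets one MCP server: M + N.
    return num_apps + num_tools


# Hypothetical portfolio: 5 AI applications, 20 internal tools/data sources.
apps, tools = 5, 20
print(integrations_without_standard(apps, tools))  # 100
print(integrations_with_mcp(apps, tools))          # 25
```

Adding a 21st tool costs five new connectors in the first model but only one new server in the second, which is the scaling argument in a nutshell.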

Rapid Industry Adoption

The beauty of MCP is that it isn’t tied to Anthropic’s or OpenAI’s models. MCP is open and extensible, meaning anyone can adopt it. That’s part of its charm: it’s not a walled garden.

Early adopters like Block (formerly Square) and Apollo.io integrated MCP into their systems at launch. Others in the market took longer to come around, but MCP eventually gained traction across the major AI IDEs: Zed, Cursor, and Windsurf (formerly Codeium) announced MCP support in early 2025, with dozens of household names following shortly thereafter.

Source: X (@Sama)

OpenAI officially announced MCP integration with its Agents SDK in March 2025, with CEO Sam Altman tweeting his enthusiasm for MCP. This put the hype into overdrive, as rarely do the Red Sox (Anthropic) & Yankees (OpenAI) agree to play nicely.

3. Why Use MCP?

Why should enterprises use MCP? In short: giving AI models reliable access to the right context has been hard, and MCP offers a standard way to solve that problem.

Why should developers use MCP? See below for my two cents:

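Fewer Custom Integrations: Before MCP, if you wanted an AI model to access a SharePoint site, a customer database, and Slack, you’d need to implement three different plugins or connectors, each with its own custom code. With MCP, each system can be exposed once through an MCP server that any MCP-compatible client can reuse.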

Context Quality and Relevance: The smartest AI is only as good as the data it has access to. Without easy access to live context, models will give generic or outdated answers because they’re not able to see the most recent data.

Interoperability: In an AI landscape that’s constantly evolving, it’s common to experiment with different LLM providers or tools. Without a standard protocol, this means re-integrating data sources and building new connectors each time.

Lower Maintenance Costs: Custom integrations don’t scale. Every time an API changes or you want to adopt a new AI model, the work must be redone. This becomes a nightmare to maintain over time.

Improved Cybersecurity and Privacy: Providing context often means giving an AI access to sensitive data. Because MCP is an open protocol, you can host MCP servers within your own infrastructure, keeping data within your environment.
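To make the first and last points concrete, here is a toy, in-process sketch of the server side: one dispatcher that exposes several internal systems through the same `tools/list` and `tools/call` methods, and that could run entirely inside your own infrastructure. Everything here is an assumption for illustration: the tool names, handlers, and data are hypothetical stand-ins for something like a customer database or wiki. Real MCP servers (typically built with the official SDKs) also advertise an input schema per tool and handle the `initialize` handshake, transport, and argument validation, all omitted here.

```python
from typing import Any, Callable, Dict, Tuple


# Hypothetical handlers standing in for internal systems.
def lookup_customer(customer_id: str) -> str:
    customers = {"c-42": "Acme Corp (tier: enterprise)"}
    return customers.get(customer_id, "not found")


def search_wiki(query: str) -> str:
    return f"No wiki pages matched {query!r}"  # placeholder result


# Registry: tool name -> (description, handler). Adding a system = one entry.
TOOLS: Dict[str, Tuple[str, Callable[..., str]]] = {
    "lookup_customer": ("Look up a customer record by ID", lookup_customer),
    "search_wiki": ("Search the internal wiki", search_wiki),
}


def error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch one JSON-RPC 2.0 request to the matching tool."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        tools = [{"name": name, "description": desc}
                 for name, (desc, _) in TOOLS.items()]
        result: Dict[str, Any] = {"tools": tools}
    elif method == "tools/call":
        params = request.get("params", {})
        name = params.get("name")
        if name not in TOOLS:
            # -32602 is the standard JSON-RPC "Invalid params" code.
            return error(request_id, -32602, f"Unknown tool: {name}")
        _, handler = TOOLS[name]
        text = handler(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return error(request_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


response = handle({
    "jsonrpc": "2.0", "id": 7, "method": "tools/call",
    "params": {"name": "lookup_customer", "arguments": {"customer_id": "c-42"}},
})
print(response["result"]["content"][0]["text"])  # Acme Corp (tier: enterprise)
```

The design choice worth noticing is the registry: connecting one more internal system means adding one entry rather than writing a new client integration, because every MCP client already speaks `tools/list` and `tools/call`.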

4. What does MCP Mean for AI Adoption?

MCP may not be as flashy as the latest LLM, but it’s arguably just as important. As foundation models have grown more capable, the ways AI agents connect to external tools, data, and APIs have become increasingly fragmented.

“Even the most sophisticated models are constrained by their isolation from data – trapped behind information silos and legacy systems.” Anthropic, on why context integration matters

For developers, this fragmentation means writing custom integration logic for every system an agent needs to connect to. That’s why a standard for context integration like MCP is such a big deal.

So, what does this mean in practice? Consider an AI coding assistant like Cursor or Windsurf. Both IDEs are rolling out more and more agentic capabilities, such as suggesting edits across multiple files at once. But letting those agents actually work with external tools and data has required a small army of bespoke connectors: a major buzzkill for AI developers.

Now with MCP, Cursor and Windsurf developers have access to a shared protocol that lets their IDEs access external tools on their behalf. To use a consumer metaphor, AI developers effectively have a universal remote control (MCP) to connect to all of their digital devices and services.

MCP offers a win-win: better performance and capabilities for AI models, and improved efficiency and safety for developers and organizations. By adopting MCP, enterprises can build AI solutions that are easier to maintain.

It’s hard to imagine vendors not scrambling to add MCP servers – already we’ve seen a flurry of announcements from foundation model companies and startups across the AI tool stack. Whether MCP remains relevant long-term is anyone’s guess, but I’m excited to see how things shake out.

Until then, to MCP or Not (MCP), that is the question.

Note: Stay Tuned for Next Week’s Article: MCP Part II

Disclaimer: The information contained in this article is not investment advice and should not be used as such. Investors should do their own due diligence before investing in any securities discussed in this article. While I strive for accuracy, I can’t guarantee the accuracy or reliability of this information. This article is based on my opinions and should be considered as such, not a point of fact. Views expressed in posts and other content linked on this website or posted to social media and other platforms are my own and are not the views of NextEra Energy Investments (NEI) or NextEra Energy (NEE: NYSE).